The Disabled Face AI-Driven Exclusion — Despite Huge Market Potential


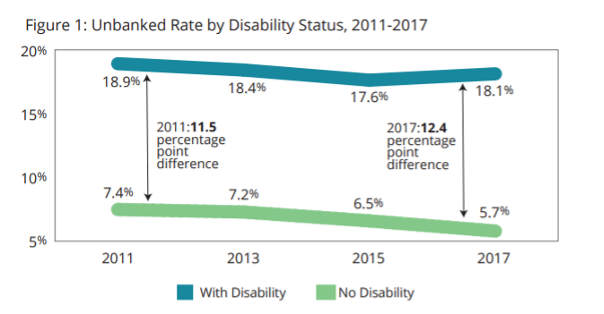

The disability community, despite possessing over $1.2 trillion in annual disposable income, remains highly unbanked and underbanked across the globe. A 2017 FDIC survey of unbanked and underbanked households found that 18% of U.S. households with a disability were unbanked, compared to 5.7% of households without a disability. Naturally, some fintechs have sought to reach this underserved segment using technologies common in the sector, such as artificial intelligence (AI). Yet the exclusion and discrimination resulting from such AI-powered services highlight a contradiction inherent to data-fueled, machine learning-powered fintech products: “personalization” methods may fail to account for demographic exceptions, so that those who need the most tailored solutions are instead excluded altogether.

Making The Impossible Possible

Despite the vast number of people living with disabilities, well over one billion worldwide, the segment remains chronically underserved, owing to a lack of attention and limited knowledge of how to serve its financial needs. A cursory search for financial service providers focused primarily on people with disabilities turns up very few: perhaps Purple Financial and True Link Financial, which respectively provide mobile banking and financial services in the US. Yet this is certainly not due to a lack of need for tech-enabled solutions oriented around the disabled community.

“For people without disabilities, technology makes things easier. For people with disabilities, technology makes things possible.”

Tom Foley, Executive Director, National Disability Institute

True Link Financial demonstrates what is possible when a provider focuses on people with disabilities, along with their caregivers and families, through its Visa card and investment management offerings. It targets caregivers and professional fiduciaries with configurable Visa cards that let them monitor a cardholder’s spending, preserving the individual’s autonomy while guarding against risky purchases and scams.

Source: National Disability Institute

Consider, for instance, a person with Alzheimer's who keeps buying hair ties several times a day because of memory loss. Rather than keeping them away from the grocery store altogether, which would limit their independence, their caregivers could simply cap spending at the local grocery store and set up alerts for the person’s latest purchases.
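The caregiver controls in this example boil down to a per-merchant spending cap plus purchase alerts. The sketch below shows one way such a rule could work; the `Card` and `CardRules` classes, the merchant label and the cap value are hypothetical illustrations, not True Link’s actual product or API, and resetting the daily totals at midnight is left out for brevity.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class CardRules:
    """Hypothetical caregiver-configured rules for one cardholder."""
    merchant_caps: dict[str, float] = field(default_factory=dict)  # daily cap per merchant
    alert_above: float = 0.0  # alert on any approved purchase above this amount


@dataclass
class Card:
    rules: CardRules
    spent_today: dict[str, float] = field(default_factory=dict)  # running total per merchant
    alerts: list[str] = field(default_factory=list)  # messages a caregiver would receive

    def authorize(self, merchant: str, amount: float) -> bool:
        """Approve a purchase unless it would push the merchant's daily total past its cap."""
        cap = self.rules.merchant_caps.get(merchant)
        so_far = self.spent_today.get(merchant, 0.0)
        if cap is not None and so_far + amount > cap:
            self.alerts.append(f"DECLINED at {merchant}: ${amount:.2f} (daily cap ${cap:.2f})")
            return False
        self.spent_today[merchant] = so_far + amount
        if amount > self.rules.alert_above:
            self.alerts.append(f"Purchase at {merchant}: ${amount:.2f}")
        return True


# Hypothetical setup: grocery spending capped at $20/day; other merchants uncapped.
card = Card(CardRules(merchant_caps={"grocery": 20.0}))
for _ in range(6):
    card.authorize("grocery", 4.0)
print(card.alerts[-1])  # DECLINED at grocery: $4.00 (daily cap $20.00)
```

Five $4 purchases go through and the sixth is declined, while every event, approved or not, lands in the caregiver’s alert log. The cardholder can still shop; only the repeated spending is contained.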

Although disability-oriented fintechs adapt common tech solutions to the community’s specific needs, their path to customer acquisition differs from that of other financial service providers (FSPs). According to Kai Stinchcombe, CEO of True Link Financial, people first search for assistive products, such as a wheelchair or a hearing aid, not for a banking service or insurance. Financial products or services focused on people with disabilities can, however, be categorized as assistive technologies, which can reach the relevant demographics more directly but require different marketing, distribution and outreach strategies.

As the small number of FSPs catering exclusively to people with disabilities suggests, other hurdles can prove difficult to overcome. Beyond the regulatory hurdles all fintechs face, disability-oriented fintechs contend with operational complexities such as sourcing knowledgeable employees, and their founders must invest in resource-heavy research and development because comprehensive data on this market is lacking.

To a certain degree, FSPs that serve a wider range of people have also worked to make their platforms accessible, contributing to the financial inclusion of people with disabilities. However, the accessibility these FSPs provide remains limited. A digital accessibility audit of top US banks found that none of the banks scanned met the Web Content Accessibility Guidelines (WCAG) 2.1, even though their sites included accessibility options. While they may offer technical accessibility in the form of text-to-speech, captioning, relay calls and online chat, the language on their sites remains harder to understand than the average reading level. Accessibility features at mainstream FSPs also typically target particular disabilities, chiefly visual and auditory impairments, leaving out numerous cognitive, behavioral and physical disabilities. An individual with memory loss or schizophrenia, for instance, would gain little from these features.

Accessibility remains a top concern for many FSPs, leading them to turn to AI to expand their assistive technologies and their service to disabled clients, and to improve decision-making more broadly. Yet AI in the financial sector brings critical problems of its own, including algorithmic bias, privacy risks and a de-emphasis on live customer service, all of which reinforce the disadvantages the underserved disability community must grapple with.

Excluding The Exceptions

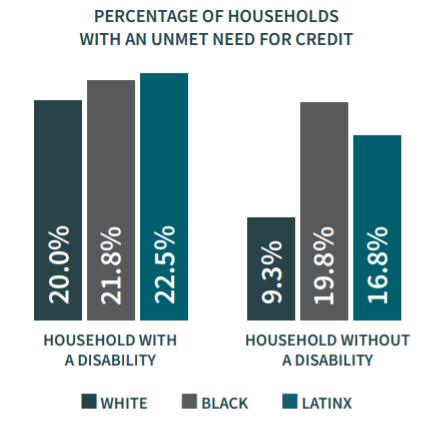

People with disabilities are a heterogeneous group facing different realities and issues, cutting across ethnicities, sexual orientations and economic statuses. Using AI to infer patterns and serve people with disabilities would therefore require a degree of personalization that may simply not be possible within current data limits. The intersection of race and disability, for instance, has not been adequately investigated. Algorithms often exclude people of color when assigning interest rates for certain loan facilities, owing to a historical lack of access to opportunities that could have improved their credit scores. Data on race, disability and credit access illustrate inequalities in bank credit across racial groups, with disabled people underserved across the board:

Source: National Disability Institute

Under AI-driven credit solutions, such inequalities are transformed into algorithmic bias. These biases stem from structural barriers that have long hindered disabled people’s participation in the labor market, education and the financial sector. Those barriers are compounded by race-based and gender-based discrimination, yet disabled people have fewer options for data-based recourse.

“There are some very large data sets out there that look at race, ethnicity, gender, but they never ask if somebody has a disability. There is still a paucity of data that can be broken down by disability.”

Tom Foley, Executive Director, National Disability Institute

Without key data points that shed more light on disability, AI systems are more likely to exhibit bias, either by excluding disabled people or by misclassifying them. A 2021 research paper describes a common scenario in which very few people with disabilities are included in a large dataset. A disabled person may then be classified as an outlier unfit for proper monetization, effectively marginalizing them.
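To make the outlier scenario concrete, here is a minimal sketch assuming a naive data-cleaning step that drops any record more than three standard deviations from the mean of a feature. The data is synthetic, and the feature values, group sizes and `z_cut` threshold are illustrative assumptions, not figures from the 2021 paper.

```python
import statistics


def flag_outliers(values, z_cut=3.0):
    """Flag values whose z-score (using the population standard deviation) exceeds z_cut."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [abs(v - mean) / sd > z_cut for v in values]


def drop_outliers(values, z_cut=3.0):
    """Naive cleaning step: keep only the values that were not flagged."""
    return [v for v, is_out in zip(values, flag_outliers(values, z_cut)) if not is_out]


# Synthetic feature: 990 majority records clustered around 40,
# plus 10 records from an under-represented group clustered at 5.
majority = [38, 40, 42] * 330
minority = [5] * 10
values = majority + minority

cleaned = drop_outliers(values)
print(len(values), "->", len(cleaned))  # 1000 -> 990
print("minority records left:", cleaned.count(5))  # 0
```

Because the small group sits far from the majority mean, all ten of its records are flagged and removed, so any model trained afterward never sees that group at all. The filter is not malicious; it simply treats statistical rarity as noise.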

This data gap is aggravated further because employees at many financial institutions know little about inclusive protocols for people with disabilities, or even how to collect data from them. The ‘disability question,’ as Tom Foley calls it, must be asked when collecting data, yet asking it well takes both intentionality and knowledge if the results are to reflect the spectrum of disability and the unique experiences of people across it. A bank serving several people with mental disabilities may find that one needs closer caregiver supervision over their use of funds, while another only needs limits in specific areas, such as purchases at the local store.

Mo’ Data… Mo’ Problems?

An obvious remedy may seem to be gathering more disability-specific data. If private firms gathered granular data on people with disabilities, detailed enough to break it down by disability type and extract the insights needed to improve machine learning systems, wouldn’t the data problem be solved?

Such an approach brings a myriad of challenges. Data privacy becomes an even more pressing issue, owing to the highly individualized data gathering it would require. As it stands, many assistive technologies on the market already reveal information about a user’s condition to the sites they visit before the user has chosen to disclose it. Once this information becomes part of a person’s digital footprint, it may lead to their exclusion in critical areas, including access to job opportunities and financial services such as loans, according to the European Disability Forum.

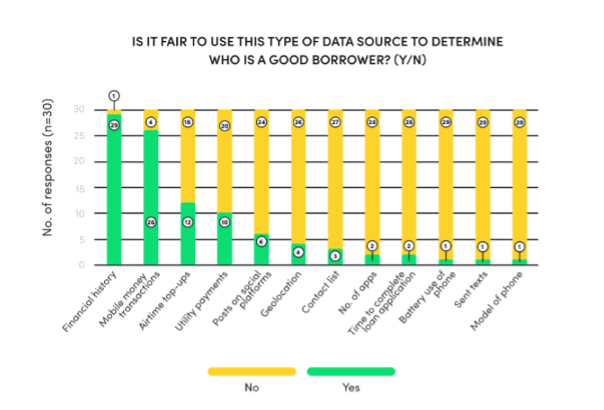

The issue is especially acute when it comes to collecting alternative data for lending and credit scoring. The Centre for Financial Inclusion points out that alternative data collection can draw on an array of data points, including a person’s phone type, airtime top-up history, social media posts, text messages sent and more. A survey of Rwandans revealed that once they understood the kind of data some digital lenders were collecting, they did not consider that level of data collection fair.

Source: Centre for Financial Inclusion

The risk of identifying a person through such data is considerable, and greater still for people with disabilities. Even when data is anonymized, details of a particular disability combined with location data may be enough to identify someone to scammers on the internet after a data leak or hack. FSPs may also exploit lax regulations to collect more data than consumers are aware of.
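The re-identification risk can be illustrated with k-anonymity, a standard measure of how many records share the same combination of quasi-identifiers: attributes such as a postcode that are not names but can still narrow down who a person is. The sketch below uses a tiny synthetic table; the field names, postcodes and disability labels are hypothetical.

```python
from collections import Counter


def _key(record, quasi_ids):
    """Tuple of the record's values for the chosen quasi-identifier fields."""
    return tuple(record[q] for q in quasi_ids)


def k_anonymity(records, quasi_ids):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(_key(r, quasi_ids) for r in records)
    return min(groups.values())


def unique_records(records, quasi_ids):
    """Records whose quasi-identifier combination appears only once (k = 1)."""
    groups = Counter(_key(r, quasi_ids) for r in records)
    return [r for r in records if groups[_key(r, quasi_ids)] == 1]


# Synthetic "anonymized" records: no names, only postcode and disability status.
records = [
    {"postcode": "10001", "disability": "none"},
    {"postcode": "10001", "disability": "none"},
    {"postcode": "10001", "disability": "none"},
    {"postcode": "10001", "disability": "low vision"},
    {"postcode": "10002", "disability": "none"},
    {"postcode": "10002", "disability": "none"},
]

print(k_anonymity(records, ["postcode"]))                # 2
print(k_anonymity(records, ["postcode", "disability"]))  # 1
print(unique_records(records, ["postcode", "disability"]))
```

Grouped by postcode alone, every record shares its values with at least one other (k = 2). Adding the disability field drops k to 1: the single person listed with low vision in postcode 10001 is now unique in the “anonymized” table, which is exactly the combination of disability detail and location the paragraph above warns about.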

“Many data protection frameworks and regulations rely on the idea of ‘notice and consent,’ which puts the onus of governing privacy on the consumer. This can mean that if a provider notifies you on how they are using your data (typically in fine print and legalese, which most people scroll through) and the consumer consents, then the provider’s work is done.”

Alexandra Rizzi, Senior Director, Centre for Financial Inclusion (CFI)

Notice and consent is more likely to harm people from marginalized communities and low-income areas, where the gap between consumers’ understanding of how their data is used and how it is actually used is extremely large. According to research from the Centre for Financial Inclusion, in Global South countries such as Indonesia and Rwanda, people’s knowledge of how their data is used is very limited. This knowledge gap exposes people with disabilities in these areas to a particularly high risk of data misuse. In a world where data is king for tech-based firms, many companies simply bypass ethical considerations and collect data at the expense of vulnerable parties.

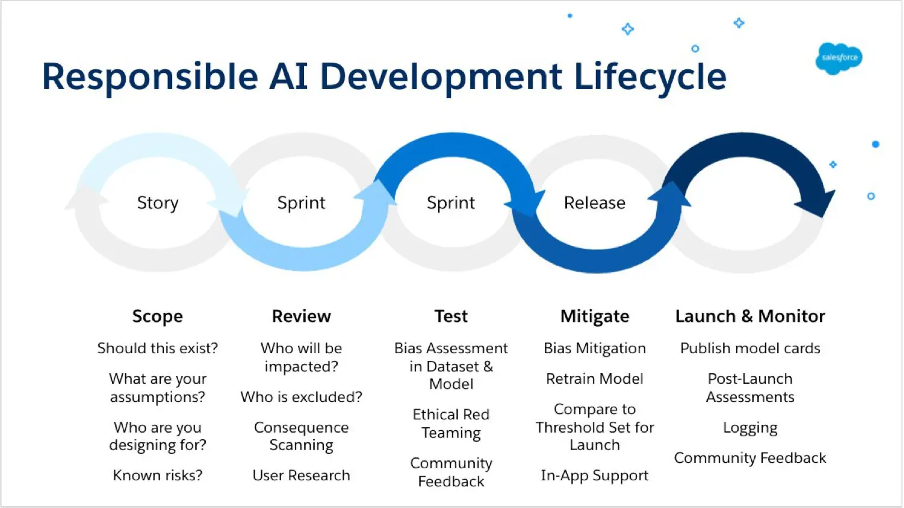

To counter this, Rizzi suggests a privacy-by-design approach that encourages stakeholders to move beyond box-checking, in which companies consider privacy only at the end of the product development cycle. Under privacy by design, financial service providers would build privacy into every stage of product development and check for AI bias before a system is deployed.
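One simple check that could be run before deployment compares a model’s approval rates for applicants with and without a disclosed disability. The sketch below computes a disparate impact ratio and applies the “four-fifths” threshold borrowed from US employment-selection guidelines. The threshold, the group labels, the `passes_predeployment_check` gate and the decision data are all assumptions for illustration, not a procedure Rizzi or the CFI prescribes, and the check presumes the provider holds disability data to group by, which, as noted earlier, is often missing.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, protected, reference):
    """Protected group's approval rate divided by the reference group's."""
    rates = approval_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has no approvals")
    return rates[protected] / rates[reference]


def passes_predeployment_check(decisions, protected, reference, threshold=0.8):
    """Hypothetical gate: fail if the ratio falls below the (assumed) four-fifths threshold."""
    return disparate_impact_ratio(decisions, protected, reference) >= threshold


# Synthetic model decisions on a validation set (illustrative only).
decisions = (
    [("no_disability", True)] * 60 + [("no_disability", False)] * 40
    + [("disability", True)] * 20 + [("disability", False)] * 30
)
ratio = disparate_impact_ratio(decisions, "disability", "no_disability")
print(f"disparate impact ratio: {ratio:.2f}")  # 0.67
print("deploy" if passes_predeployment_check(decisions, "disability", "no_disability")
      else "hold for review")  # hold for review
```

A single ratio like this is a coarse screen rather than proof of fairness, but running it before launch, rather than after complaints arrive, is the shift the privacy-by-design approach calls for.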

Source: InfoWorld

Left Behind — The Disabled, Or Companies?

Foley and Stinchcombe both stress the need for exception-management protocols in product solutions, since enabling inclusivity often requires a personal touch, either alongside AI or on its own. Foley, who has a visual impairment, speaks fondly of an internet service provider whose customer service representatives knew the software used by visually impaired customers. True Link’s Stinchcombe has likewise been intentional about keeping a human touch in customer service, while enabling users to configure their True Link Visa cards to their specific and changing needs.

As disability inclusion goes mainstream among digital financial service providers, the disability community, which includes the friends and family of people with disabilities, is likely to turn to the more inclusive brands in the market. Beyond expanding their potential customer base, FSPs serving larger, more diverse populations can sharpen their personalization capabilities by applying lessons learned from disability-oriented solutions. Designing and guiding algorithms more intentionally, incorporating data collected from the end user (the person with a disability) from the very start of the product cycle, will likely enable fairer treatment for people with disabilities, and those lessons can and should be applied across the board.

Image courtesy of H Heyerlein

Click here to subscribe and receive a weekly Mondato Insight directly to your inbox.
