ChatGPT: The Challenges And Opportunities Coming To Financial Services


It’s been decades since algorithmic and high-frequency trading transformed Wall Street, and years since the financial services industry began to integrate artificial intelligence in areas such as fraud detection, lending decisions and robo-advisory services. Yet the recent explosion of generative AI tools like ChatGPT, which produces human-like text on seemingly any subject and in any style so convincingly that it appears to conquer the vaunted Turing Test, has opened the floodgates of possibilities. The advent of such a powerful language processor, freely available for public use, threatens to upend various parts of the financial services industry, extending beyond chatbots and robo-advisors to the workforce needed for something as skilled as coding. As artificial intelligence reaches a crucial tipping point, and as AI bias lingers, putting the proper private and public controls in place ahead of the technology’s dizzying progress becomes a more urgent task just as it becomes a more challenging one.

New News Is Old News

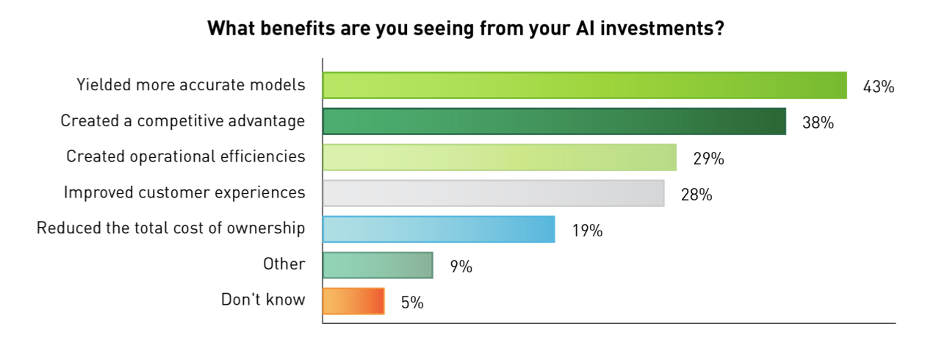

Even before ChatGPT entered the public consciousness, artificial intelligence had already transformed much of what financial services do. In a recent Nvidia survey, 78% of financial services companies said they use at least one kind of artificial intelligence tool. Regardless of the shape it takes, the benefits have been clear: over 30% of those surveyed by Nvidia said AI increased annual revenues by more than 10%, while over a quarter saw AI reduce costs by more than 10%.

Source: Nvidia

In broad strokes, AI is employed in financial services in four categories:

- fraud detection and compliance

- credit risk analysis

- chatbots and robo-advisers

- algorithmic trading

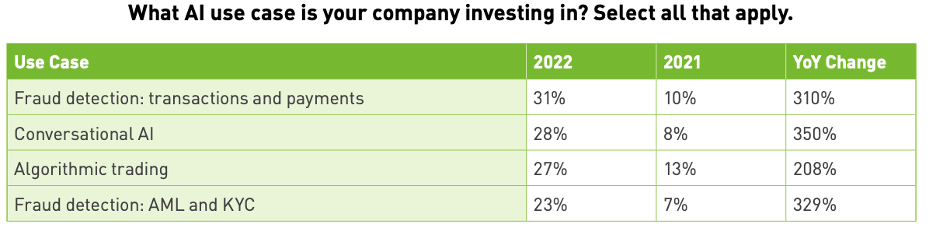

Each of these areas has had a different starting point and rate of acceleration, but across the board, investment in AI grew tremendously in financial services this past year. Though none of the use cases surveyed by Nvidia saw more than 14% industry penetration in 2021, nine of the 13 use cases tracked in 2022 saw investment from more than 15% of the industry. Investing in AI is by now essentially a requisite to stay alive in financial services, especially as operational efficiency becomes only more crucial in the recession likely to come this year.

But it’s important to note that while even the laggards have jumped in on the AI revolution, this ubiquity is perhaps best framed as a few leaders surrounded by many who possess only a basic understanding of how their AI really functions. That challenge grows as AI models are fed more data to create more sophisticated algorithms that rely on a number of interrelated variables, often beyond human comprehension.

“In surveys, banks will say, 'Oh, yeah, we do use AI to some degree.' But when they're asked, 'how well do you understand it?' There's not that same degree of response. It's one thing to apply it, but it's another thing entirely to go ahead and blindly apply a one-size-fits-all algorithm.”

Bonnie Buchanan, Director of Sustainable and Explainable Fintech (SAEF) Center, Surrey Business School

Talk Of The Town

According to the Nvidia survey, conversational AI was among the features that saw the biggest jump in investment in 2022, a year that saw ChatGPT’s entry only in its last month.

Source: Nvidia

Truly advanced conversational AI electrifies the public precisely because it ventures into cognitive fields so unlike the confined, programmed capabilities to which machines have until now been limited. Self-improving artificial intelligence programs tend to grow in processing power and capability along an exponential curve, the classic example being the evolution of the computer. Nonetheless, ChatGPT’s mastery of language processing, in styles ranging from second grader to erudite scholar and even in coding languages, signals to experts like Buchanan not simply an acceleration of what’s come before, but a true alteration of the course we’ve been on, including in financial services.

In ChatGPT’s own words, the immediately apparent benefits ChatGPT will provide to financial services will be in areas such as automating customer service tasks and generating insights and analysis for banks to make better informed decisions while helping to detect fraud. Already, ChatGPT can do simple coding and debugging, with some estimating it will only take two to three more years for it to be able to perform actual banking coding.

Routine questions and queries can now be handled by chatbots without the limitations seen before, further freeing human customer service agents to address more complex queries. This should also carry over to coding duties, freeing up time for coders to engage in higher-level pursuits.

How this impacts the workforce in these sectors is unclear. It could be another false alarm, or a true paradigm shift. Alberto Rossi, a professor at Georgetown University whose research focuses on robo-advisors, notes how the same fears were evoked when robo-advisors first emerged — concerns that proved unfounded as the democratization of financial advice dramatically expanded the market for financial advice so that more financial advisors were needed, though to provide a more human touch to complement advice provided by AI.

This next, potentially dramatic step in democratization might augur another expansion along these same lines, says Rossi, or it could replace, at least to some degree, the domains of financial customer service that previously required the human touch. At minimum, however, it’s likely that older demographics, which possess a disproportionate amount of wealth, will remain most comfortable with the human touch for the next decade or two. Considering the massive layoffs underway in swaths of fintech, however, ChatGPT’s capabilities might turbocharge such trends.

The impact of such a powerful and, perhaps most importantly, publicly accessible language processor as ChatGPT should not be underestimated. On the business end, startups lacking resources or data at scale suddenly have at their disposal an advanced machine capable of analysis and communication in almost any style, any format, and for a wide variety of purposes. The advantage for Big Techs would subsequently lie squarely in their vast stores of data, an advantage that might also be at least minimized if the now-emerging synthetic data sector proves able to narrow information gaps, conjectures Surrey’s Buchanan.

To be fair, Big Techs will also likely see a massive jump in automated communication abilities in areas like customer service. Although it’s too early to know how the titan/startup and legacy/challenger dynamics will be affected, the consumer will benefit either way, especially when it comes to access and the benefits it provides. Rossi believes it can prove vital in alleviating the shortcomings in financial literacy that plague the industry.

“The ones that are going to benefit the most are going to be the end consumers that right now are cut off from services because they don't have the kind of resources to pay for them. Financial advice [regarding] what kind of credit cards to use, how much to spend, how much to put aside every month, can be done fully in an automated way.”

Alberto Rossi, Director of the AI, Analytics and Future of Work Initiative, Georgetown University

Think Before Speaking

The rapid pace of AI’s progress naturally comes with uncertainty, and more issues are sure to emerge. AI tools that assist the financial decision-making of the disadvantaged can be a boon for the financially illiterate while also proving a crutch; how much worse are people at navigating in the world of Google Maps than before? The possibilities for unprecedented personalization can also nudge robo-advisors and related tools in more paternalistic directions, leading to strangely rational economic outcomes compared to what we’ve come to expect from homo sapiens of the 21st century, if at the cost of free will or direct consent, à la Thaler’s nudging approach.

Trying to prevent the dystopian variations of what comes next in AI invariably requires implementing the proper design and regulation. In the U.S., the second draft of NIST’s AI Risk Management Framework (RMF) provides the most recent conceptual framework for “mapping”, “measuring”, “managing” and governing AI systems. The RMF views trustworthy AI as having several characteristics, all delivered in an accountable and transparent manner:

- Valid and Reliable

- Safe

- Bias is managed

- Secure and resilient

- Explainable and interpretable

- Privacy-enhanced

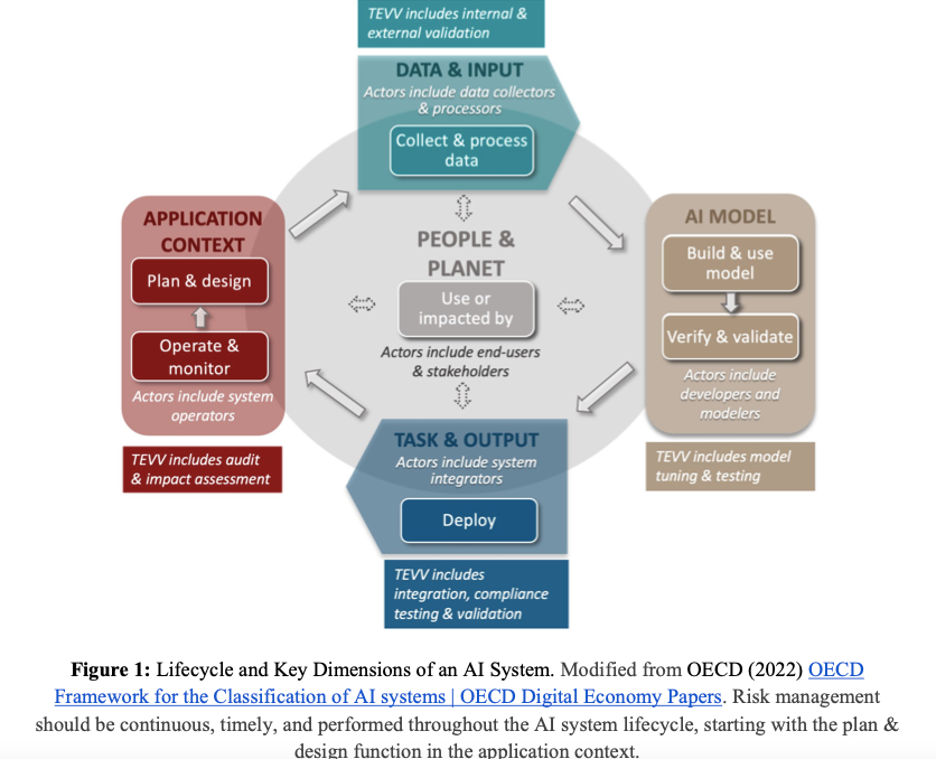

Measuring these factors requires testing, evaluation, verification, and validation (TEVV) in each of the stages: the “mapping” or planning stage, the “measuring” or development stage, and the “managing” stage after an AI has been deployed.

Source: NIST AI Risk Management Framework

Yet artificial intelligence presents novel issues for regulators that aren’t always easy to solve. Consider the area in financial services that’s elicited the most public discussion in this regard: preventing algorithmic bias in use cases such as automated lending decisions.

As Katja Langenbucher, professor of law at Goethe University in Frankfurt, describes in a recent paper, anti-discrimination law traditionally relied on chains of causation that AI-powered origination models challenge. While in the past the variables that went into a person’s FICO score were transparent, that isn’t the case in today’s world of increasingly sophisticated AI credit origination models. AI models powered by previous data sets mean that, in the words of Langenbucher, “yesterday’s world shapes today’s predictions” — giving rise to algorithmic bias that’s difficult to eliminate without throwing out the data sets altogether.

Yet today, AI-driven models have already expanded to incorporate variables far beyond what a normal human would consider relevant to the model — variables that nonetheless can serve as proxies to discriminate against certain groups in ways that are hard to discern.

Handling “proxy variables” that evade anti-discrimination measures isn’t a new challenge for legal systems; think of the poll taxes in the Jim Crow South in the U.S., which managed to circumvent civil rights protections for African Americans while nonetheless disproportionately disenfranchising them. What is new, however, is that the “proxy variables” included in AI models (a) are no longer crafted by humans in a directly discriminatory manner, and (b) are so numerous that simply taking one variable out of the mix won’t really make a difference in automated decisions. This has led some economists to conclude that prohibiting the consideration of certain variables in automated lending will prove ineffective; AI will have become too sophisticated for regulators to pin down what’s happening within the black box algorithm.
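To make the proxy problem concrete, here is a minimal Python sketch on purely synthetic data. It is an illustration under stated assumptions, not a model of any real lender: the five proxy features, the 70% agreement rate with the protected attribute, and the majority-style approval rule are all hypothetical choices made for this example. The decision rule never sees the protected attribute, yet approval rates still diverge sharply between groups, and dropping one proxy barely helps.

```python
import random

def make_applicants(n=20_000, n_proxies=5, agreement=0.7, seed=0):
    """Build synthetic applicants as (protected, proxies) pairs.

    Each hypothetical proxy feature matches the protected attribute
    with probability `agreement` (an assumed value, not an estimate).
    """
    rng = random.Random(seed)
    applicants = []
    for _ in range(n):
        protected = rng.random() < 0.5
        proxies = [
            protected if rng.random() < agreement else not protected
            for _ in range(n_proxies)
        ]
        applicants.append((protected, proxies))
    return applicants

def approve(proxies, used):
    """Approve when fewer than half of the proxies in `used` are flagged.

    The protected attribute itself is never an input to this rule.
    """
    flagged = sum(proxies[i] for i in used)
    return 2 * flagged < len(used)

def approval_gap(applicants, used):
    """Approval rate of the non-protected group minus the protected group."""
    def rate(is_protected):
        group = [p for prot, p in applicants if prot == is_protected]
        return sum(approve(p, used) for p in group) / len(group)
    return rate(False) - rate(True)

applicants = make_applicants()
gap_all = approval_gap(applicants, used=[0, 1, 2, 3, 4])
gap_minus_one = approval_gap(applicants, used=[1, 2, 3, 4])  # one proxy removed

print(f"approval gap using all five proxies: {gap_all:.2f}")
print(f"approval gap after dropping one:     {gap_minus_one:.2f}")
```

In this toy setup the gap stays large after one proxy is removed, because the remaining features still jointly encode the protected attribute. Real credit models use far more variables with subtler correlations, which is what makes the pattern so hard for a regulator to detect by inspecting variables one at a time.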

Nonetheless, the first laws regulating AI are coming into shape. What is setting up to be the standard bearer in this respect is the EU’s proposed AI Act. In the words of Langenbucher, the AI Act seeks to treat AI models as products requiring regulation, providing a risk-based approach to how it oversees AI models in different sectors. Models that determine access to financial resources — like underwriting and scoring models — are placed in the high-risk category, which requires compliance in data governance, technical documentation and record-keeping, transparency, human oversight and checks on robustness, accuracy and cybersecurity.

The European Fintech Association came out in October in support of the AI Act, though it expressed worries about classifying credit assessment models as high-risk. Fintechs likewise fear that requiring such systems to be explainable and interpretable will limit their robustness and accuracy.

Viewing it as proactive legislation wisely framing AI models as a product with human rights implications, Langenbucher finds the AI Act to be a positive first step towards responsibly developing AI capabilities and sectors. Enforcement may still be tricky, however, especially as AI models proliferate in different financial segments.

“The question is: how standardized are enforcement mechanisms going to be? For [credit] scoring, enforcement depends on whether we are looking at a financial institution or not. If it is done by a non-bank, the more general, newly-to-be-established agencies will be in charge. I would have liked it all to be [overseen fully] by bank supervisors, even any form of [credit] scoring, because [otherwise] there is a risk of regulatory arbitrage and potentially inconsistent enforcement. That we don't know.”

Katja Langenbucher, Law Professor, Goethe University

Handing The Reins

Language has forged the building blocks of society until now: its laws, its culture, its norms, its commerce, and its economy. For the first time in our species’ existence, we have a glimpse of what it may look like when humans are no longer the only ones to wield language with such dexterity and recall, and when machines may wield it even better. Whether humans can both cultivate and harness such machine power for the betterment of humanity is a question that of course extends far beyond the financial services domain. But let us remember that finance and economics were once manmade creations at the dawn of society, too, with their imperfections mirroring the characteristics of their creator and steward. Growth through turbulence, transformation through decay, and improvement following setback are hallmarks of financial history. As we hand greater reins of that stewardship to AI models of our own creation, these rules and patterns may stay the same, or what emerges may be something entirely unexpected and new. What is certain is that a new age is upon us.

Image courtesy of DeepMind
